Paper

Code

The code is released as a SIBR framework project at: gitlab.inria.fr/sibr/projects/outdoor_relighting

Video

Abstract

We propose the first learning-based algorithm that can relight images in a plausible and controllable manner given multiple views of an outdoor scene. In particular, we introduce a geometry-aware neural network that uses multiple geometry cues (normal maps, specular direction, etc.) together with source and target shadow masks computed from a noisy proxy geometry obtained by multi-view stereo. Our model is a three-stage pipeline: two subnetworks refine the source and target shadow masks, and a third performs the final relighting. Furthermore, we introduce a novel representation for the shadow masks, which we call RGB shadow images. They reproject the colors from all views into the shadowed pixels and enable our network to cope with inaccuracies in the proxy and with the non-locality of shadow-casting interactions. Acquiring large-scale multi-view relighting datasets for real scenes is challenging, so we train our network on photorealistic synthetic data. At training time, we also compute a noisy stereo-based geometric proxy, this time from the synthetic renderings, which allows us to bridge the gap between the real and synthetic domains. Our model generalizes well to real scenes: it can alter the illumination of drone footage, image-based renderings, textured mesh reconstructions, and even internet photo collections.
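To make the RGB shadow image idea more concrete, here is a minimal sketch under simplifying assumptions; it is not the released implementation. It assumes the colors of every input view have already been reprojected into the current view's pixel grid through the multi-view stereo proxy (the reprojection step itself is not shown), and that a per-view visibility mask says which of those samples are usable. The function name `rgb_shadow_image` and its argument layout are hypothetical. The sketch averages the valid reprojected colors per pixel and writes them only where the shadow mask marks the pixel as shadowed, leaving all other pixels black; the paper's exact choice of which colors are reprojected and how they are combined may differ.

```python
import numpy as np


def rgb_shadow_image(shadow_mask, reprojected, valid):
    """Hypothetical helper: build an RGB shadow image from reprojected colors.

    shadow_mask: (H, W) bool, True where the proxy predicts shadow.
    reprojected: (V, H, W, 3) float, colors from V input views already
        reprojected into this view's pixel grid (assumed precomputed).
    valid: (V, H, W) bool, True where view v provides a usable sample.
    Returns an (H, W, 3) float image: the mean valid reprojected color in
    shadowed pixels, and zero (black) everywhere else.
    """
    # Turn the visibility mask into per-sample weights of 0 or 1.
    weights = valid[..., None].astype(reprojected.dtype)  # (V, H, W, 1)
    total = (reprojected * weights).sum(axis=0)           # (H, W, 3)
    count = weights.sum(axis=0)                           # (H, W, 1)
    # Average over views; pixels with no valid sample stay at zero.
    mean = np.divide(total, count, out=np.zeros_like(total), where=count > 0)
    # Keep colors only inside the shadow mask.
    return np.where(shadow_mask[..., None], mean, 0.0)


if __name__ == "__main__":
    # Tiny 1x2 image seen by 2 views; only the left pixel is in shadow.
    mask = np.array([[True, False]])
    reproj = np.array([
        [[[1.0, 0.0, 0.0], [1.0, 1.0, 1.0]]],  # view 0
        [[[0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]],  # view 1
    ])
    valid = np.ones((2, 1, 2), dtype=bool)
    print(rgb_shadow_image(mask, reproj, valid))
```

In the toy example the shadowed pixel receives the average of red and blue, while the lit pixel stays black even though both views see it. Storing colors rather than a binary mask is what lets a network reason about what lies in shadow, instead of only where the shadow falls.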

Funding and Acknowledgements

Supplemental Materials and Results

Document

Supplemental: Multi-view Relighting Using a Geometry-Aware Network

Results

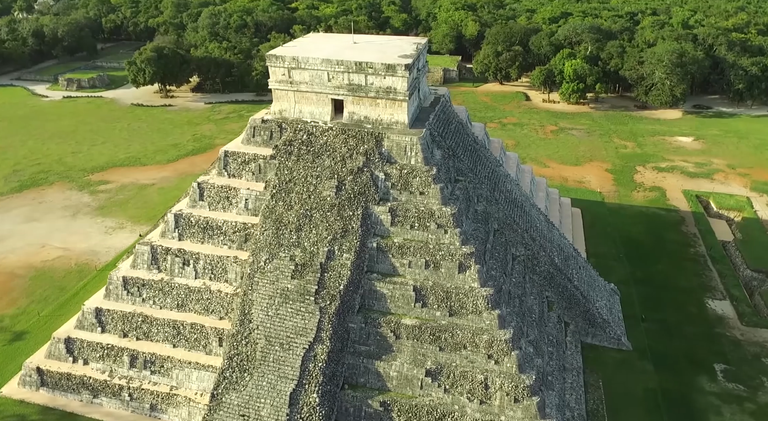

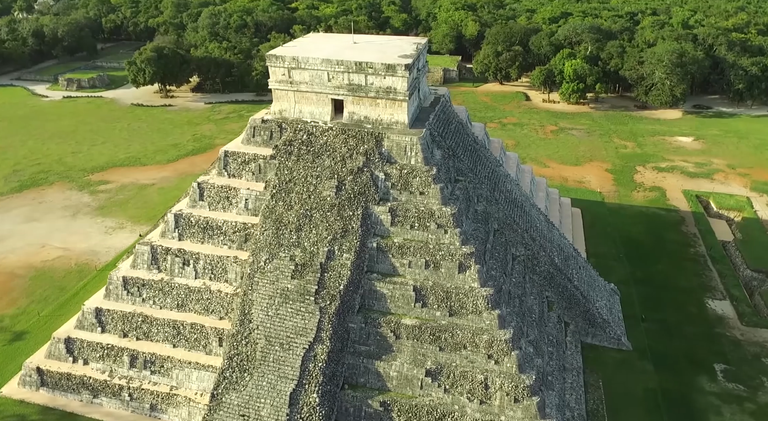

Chichen

Eilenroc

Monastere place

Mont Alban

Ruins

Russian Church

Saint Felix

Stonehenge

Ablations

Eilenroc

Russian Church

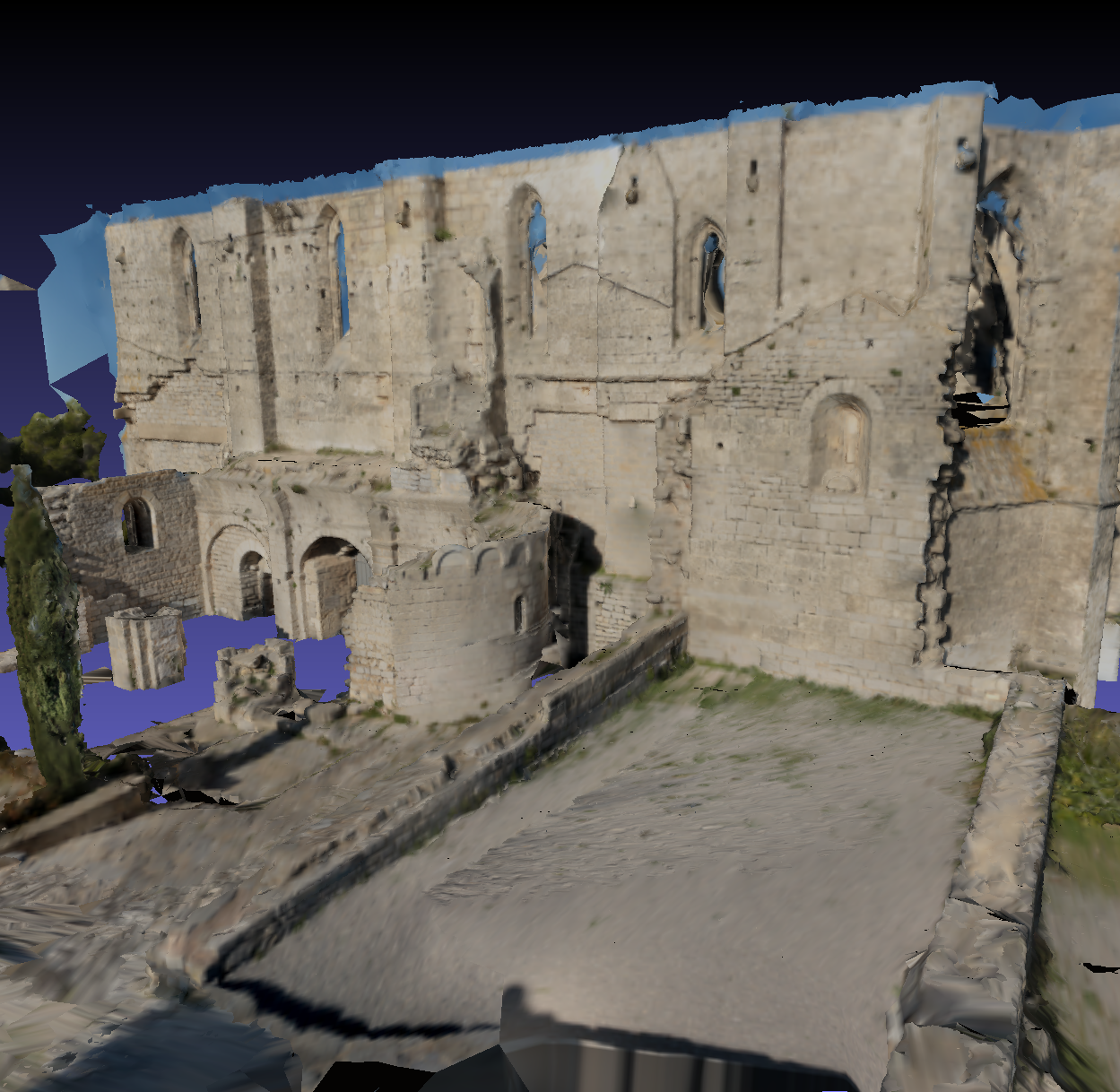

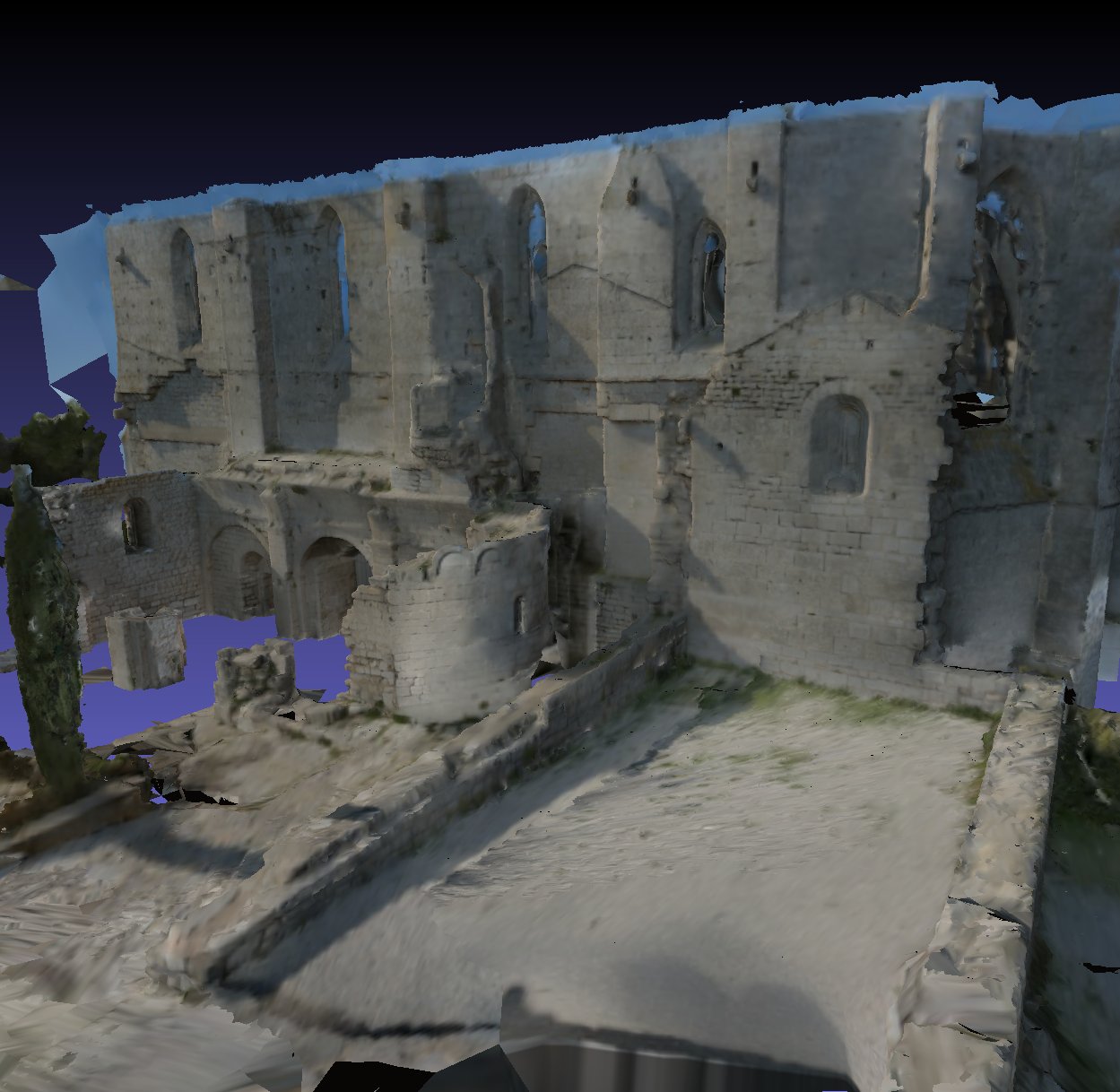

Textured Meshes

Textured Mesh Original

Textured Mesh Relit 1

Textured Mesh Relit 2

Test Scenes

Arena

Chichen

Eilenroc

Manarola

Monastere

Mont Alban

Ruins

Russian Church

Saint Anne

Saint Felix

Stonehenge