Abstract

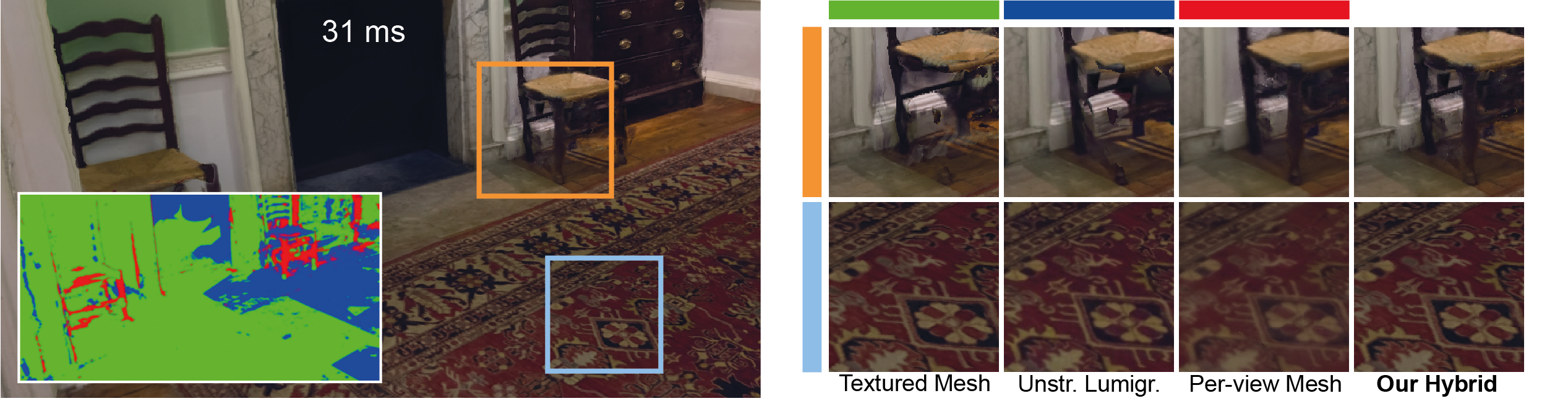

Image-based rendering (IBR) provides a rich toolset for free-viewpoint navigation in captured scenes. Many methods exist, usually with an emphasis either on image quality or on rendering speed. In this paper, we identify common IBR artifacts and combine the strengths of different algorithms to strike a good balance in the speed/quality tradeoff. First, we address the problem of visible color seams that arise from blending casually captured input images by explicitly treating view-dependent effects. Second, we compensate for geometric reconstruction errors by refining per-view information using a novel clustering and filtering approach. Finally, we devise a practical hybrid IBR algorithm that locally identifies and uses the rendering method best suited to each image region while retaining interactive rates. We compare our method against classical and modern (neural) approaches in indoor and outdoor scenes and demonstrate superiority in quality and/or speed.
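For readers new to IBR, the sketch below illustrates the basic blending step the abstract refers to, using the classic Unstructured Lumigraph Rendering (ULR) idea of weighting input views by how closely their viewing direction matches the novel view. It is a simplified, single-point illustration under stated assumptions, not the method described in this paper; the names `angle_between`, `ulr_weights`, `blend` and the parameter `k` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in radians between two (not necessarily unit) 3D vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    dot = sum(x * y for x, y in zip(a, b)) / (na * nb)
    return math.acos(max(-1.0, min(1.0, dot)))  # clamp against rounding error


def ulr_weights(input_dirs: Sequence[Vec3], novel_dir: Vec3, k: int = 4) -> List[float]:
    """Simplified ULR-style blending weights for one surface point (hypothetical helper).

    input_dirs: directions from the point towards each input camera.
    novel_dir: direction from the point towards the novel camera.
    Only the k views with the smallest angular penalty get non-zero weight;
    the next-best penalty serves as a threshold so weights fade out smoothly.
    """
    penalties = [angle_between(d, novel_dir) for d in input_dirs]
    order = sorted(range(len(penalties)), key=lambda i: penalties[i])
    best = order[:k]
    if len(order) > k:
        threshold = penalties[order[k]]
    else:
        threshold = penalties[order[-1]] + 1e-6  # fewer than k + 1 views available
    threshold = max(threshold, 1e-9)  # avoid division by zero

    weights = [0.0] * len(penalties)
    for i in best:
        weights[i] = max(0.0, 1.0 - penalties[i] / threshold)
    total = sum(weights)
    if total == 0.0:
        # All selected views sit exactly at the threshold: fall back to uniform weights.
        for i in best:
            weights[i] = 1.0 / len(best)
        return weights
    return [w / total for w in weights]  # normalize so weights sum to 1


def blend(colors: Sequence[Vec3], weights: Sequence[float]) -> Vec3:
    """Weighted sum of per-view RGB samples of the same surface point."""
    return tuple(sum(w * c[ch] for w, c in zip(weights, colors)) for ch in range(3))
```

For example, with a novel view looking along `(0, 0, 1)`, inputs along `(0, 0, 1)`, `(1, 0, 1)` and `(1, 0, 0)`, and `k=2`, the weights come out as 2/3, 1/3 and 0. The full ULR formulation also includes resolution and field-of-view penalties, which this sketch omits.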

Paper

Video

I3D 2021 Presentation

Code

The code is released as a SIBR framework project at: https://gitlab.inria.fr/sibr/projects/hybrid_ibr