Recent work has demonstrated that Generative Adversarial Networks (GANs) can be trained to generate 3D content from 2D image collections by synthesizing features for neural radiance field rendering. However, most such solutions generate radiance, with lighting entangled with materials. This results in unrealistic appearance, since lighting cannot be changed and view-dependent effects such as reflections do not move correctly with the viewpoint. In addition, many methods have difficulty with full 360° rotations, since they are often designed for mainly front-facing scenes such as faces.

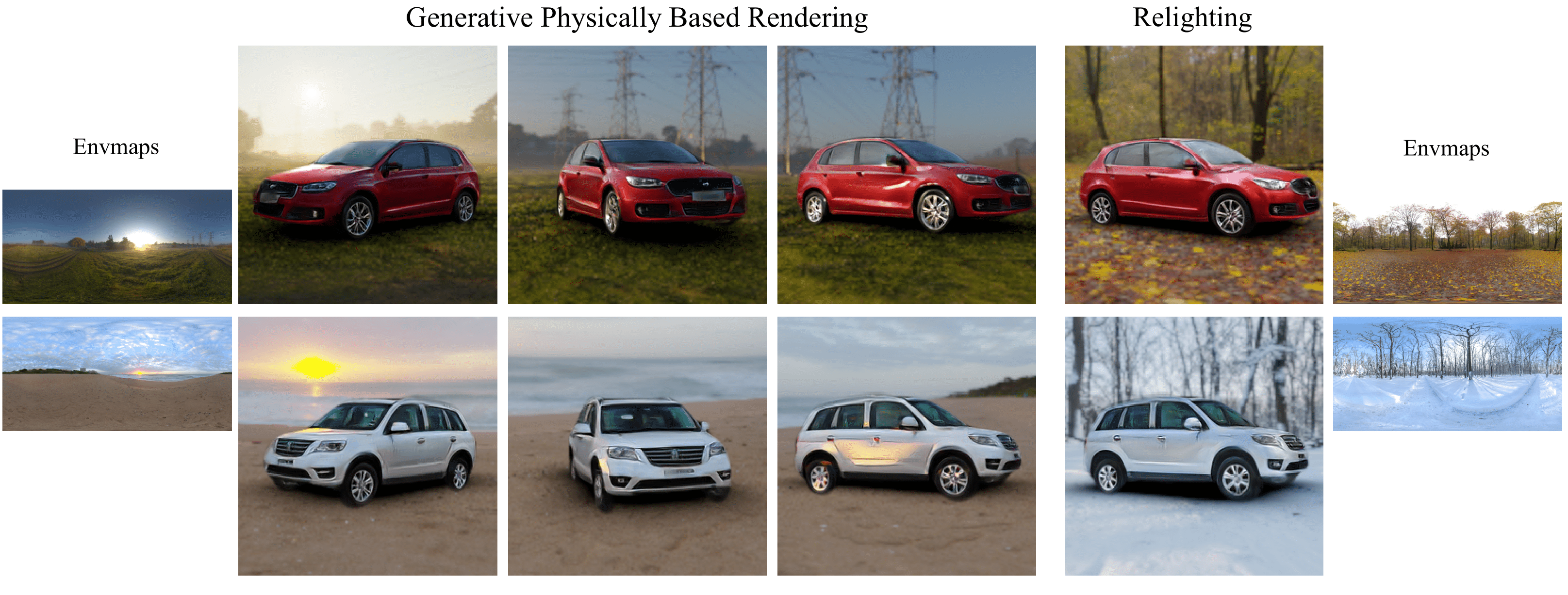

We introduce a new 3D GAN framework that addresses these shortcomings, allowing multi-view coherent 360° viewing and, at the same time, relighting for objects with shiny reflections, which we exemplify using a car dataset. The success of our solution stems from three main contributions. First, we estimate initial camera poses for a dataset of car images, and then learn to refine the distribution of camera parameters while training the GAN. Second, we propose an efficient Image-Based Lighting model, which we use in a 3D GAN to generate disentangled reflectance, as opposed to the radiance synthesized in most previous work. The generated material is used for physically-based rendering with a dataset of environment maps. Third, we improve the 3D GAN architecture compared to previous work and design a careful training strategy that allows effective disentanglement. Our model is the first to generate a variety of 3D cars that are multi-view consistent and can be relit interactively with any environment map.
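To make "refining the distribution of camera parameters" concrete, here is a minimal sketch, assuming a camera parametrized by azimuth, elevation and radius around the car, and a bounded residual predicted from the camera latent. The names `CameraAdjuster` and `look_at_pose`, the single linear layer, the y-up convention and the `max_delta` bounds are all hypothetical illustrations, not the paper's actual network or parametrization.

```python
import numpy as np

def look_at_pose(azimuth, elevation, radius):
    """Camera-to-world matrix for a camera on a sphere of `radius` looking at the origin.

    Assumed convention: y is up and the camera looks along its local -z axis.
    Elevation must stay strictly inside (-pi/2, pi/2) to avoid a degenerate up vector.
    """
    eye = radius * np.array([
        np.cos(elevation) * np.sin(azimuth),
        np.sin(elevation),
        np.cos(elevation) * np.cos(azimuth),
    ])
    forward = -eye / np.linalg.norm(eye)               # unit vector from camera toward origin
    right = np.cross(forward, np.array([0.0, 1.0, 0.0]))
    right /= np.linalg.norm(right)
    up = np.cross(right, forward)
    pose = np.eye(4)
    pose[:3, 0], pose[:3, 1], pose[:3, 2] = right, up, -forward
    pose[:3, 3] = eye
    return pose

class CameraAdjuster:
    """Hypothetical learned residual on (azimuth, elevation, radius), conditioned on z_cam.

    A single random linear layer stands in for whatever network is trained jointly with
    the GAN; tanh bounds each residual by `max_delta` so refined cameras stay near the
    estimated ones.
    """
    def __init__(self, latent_dim=512, max_delta=(0.1, 0.05, 0.1), seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 0.01, size=(3, latent_dim + 3))
        self.b = np.zeros(3)
        self.max_delta = np.asarray(max_delta, dtype=float)

    def __call__(self, cam_params, z_cam, training=True):
        cam = np.asarray(cam_params, dtype=float)
        if not training:
            return cam  # inference: the user-specified camera is used unchanged
        x = np.concatenate([z_cam, cam])
        delta = self.max_delta * np.tanh(self.W @ x + self.b)
        return cam + delta

rng = np.random.default_rng(1)
adjuster = CameraAdjuster()
estimated = np.array([0.3, 0.2, 2.7])  # made-up (azimuth, elevation, radius) from pose estimation
z_cam = rng.standard_normal(512)
refined = adjuster(estimated, z_cam, training=True)
pose = look_at_pose(*refined)
```

Bounding the residual keeps the learned camera distribution anchored to the initial pose estimates while still letting the GAN correct systematic estimation errors; skipping the adjustment at inference matches the behavior described for user-controlled cameras.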

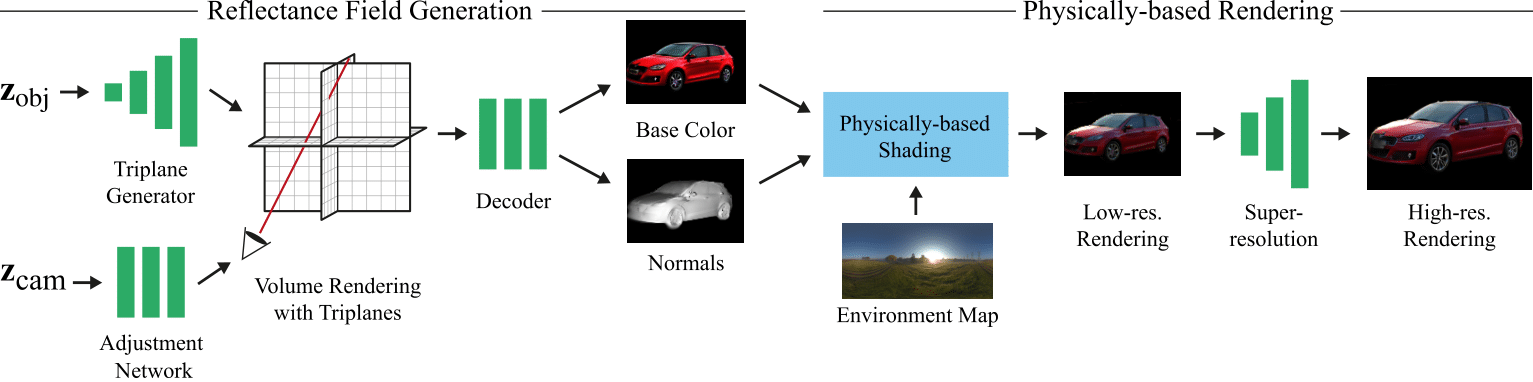

Our method is divided into two main steps. First, we sample a random vector \(\mathbf{z}_\textrm{obj}\in \mathbb{R}^{512}\) and generate a volumetric representation of triplane features. During training, we also sample a camera from the estimated poses of the training data and apply our camera adjustment with latent \(\mathbf{z}_\textrm{cam}\in \mathbb{R}^{512}\). At inference, the camera is set by the user and the adjustment is not applied. To render from a specific camera viewpoint, we cast a ray for each pixel and sample positions along the ray to compute their corresponding triplane features, which are decoded into base color and density using a lightweight decoder MLP. The base color and density are volume-rendered. We also compute surface normals from the gradient of the density with respect to the sample position, obtained by backpropagating through the decoder. Second, we sample an environment map from our dataset and shade our car representation using the efficient physically-based approximation of a microfacet BRDF. We render the images at \(128\times 128\) for efficiency (and to fit memory constraints during training) and then apply a super-resolution network to reach \(256\times 256\). Green denotes trainable neural networks and blue denotes fixed functions.
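The volume-rendering step and the density-gradient normals can be illustrated on a single ray. This is a minimal sketch, assuming the standard NeRF alpha-compositing quadrature; an analytic toy sphere density (`sphere_density`, hypothetical) replaces the triplane features and decoder MLP, and central finite differences replace backpropagation through the decoder. All function names are illustrative only.

```python
import numpy as np

def composite(base_color, density, t):
    """Alpha-composite per-sample base color along one ray (NeRF-style quadrature).

    base_color: (N, 3), density: (N,), t: (N,) increasing sample depths.
    Returns the pixel color and the per-sample weights.
    """
    deltas = np.diff(t, append=t[-1] + 1e10)       # last interval treated as unbounded
    alpha = 1.0 - np.exp(-density * deltas)         # opacity of each segment
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))  # light surviving to each sample
    weights = alpha * trans
    return (weights[:, None] * base_color).sum(axis=0), weights

def sphere_density(x, radius=1.0, sharpness=50.0, max_density=100.0):
    """Toy stand-in for triplane features + decoder MLP: dense inside a sphere, ~0 outside."""
    sdf = radius - np.linalg.norm(x, axis=-1)
    return max_density / (1.0 + np.exp(-sharpness * sdf))

def density_normal(density_fn, x, eps=1e-4):
    """Surface normal as the negated, normalized density gradient at x.

    Central differences replace backpropagation through the decoder in this sketch.
    """
    grad = np.zeros(3)
    for i in range(3):
        step = np.zeros(3)
        step[i] = eps
        grad[i] = (density_fn(x + step) - density_fn(x - step)) / (2.0 * eps)
    n = -grad  # density increases toward the interior, so the outward normal is -grad
    return n / np.linalg.norm(n)

# One ray through a unit sphere centered at the origin.
origin = np.array([0.0, 0.0, 3.0])
direction = np.array([0.0, 0.0, -1.0])
t = np.linspace(0.5, 3.5, 128)
points = origin + t[:, None] * direction
base = np.tile([0.8, 0.1, 0.1], (t.size, 1))      # constant base color for simplicity
color, weights = composite(base, sphere_density(points), t)
depth = (weights * t).sum()                        # expected termination depth (~2 for this ray)
normal = density_normal(sphere_density, origin + depth * direction)
```

For this ray the expected depth lands near the sphere surface at \(t = 2\) and the normal points back toward the camera along \(+z\); in a full renderer the same weights would also composite the normals, which then feed the shading step.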

Our method enables interactive physically-based rendering (PBR) with 3D GANs, with arbitrary illumination given as environment maps and unconstrained camera navigation, including 360° rotations. We achieve this with three main contributions: a method to estimate camera poses for a dataset of car images, a generative pipeline for PBR, and an improved generative network architecture and training strategy. We only require a dataset of images of the desired object class (cars in our case) and a dataset of environment maps for training. Our method learns a disentangled representation of shading, enabling relighting with high-frequency reflections on shiny car bodies.
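Relighting a disentangled material amounts to shading the same base color, normals and roughness under a different environment map. The sketch below uses a split-sum image-based-lighting approximation, one common efficient way to shade a microfacet BRDF under an environment map; this is an assumption, and the paper's own IBL model may differ. A crude 2×2 box-filter mip chain of an equirectangular map stands in for proper GGX prefiltering, roughness selects the mip level, and the specular scale/bias uses Karis's analytic fit instead of a precomputed lookup table. All function names and the toy environment maps are hypothetical.

```python
import numpy as np

def build_mip_levels(env, n_levels):
    """Crude prefiltering: repeated 2x2 box downsampling of an equirectangular map (H, W, 3).

    H and W must be divisible by 2**(n_levels - 1). Real GGX prefiltering would convolve
    with the specular lobe and account for equirectangular distortion.
    """
    levels = [env]
    for _ in range(n_levels - 1):
        h, w, c = levels[-1].shape
        levels.append(levels[-1].reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3)))
    return levels

def sample_equirect(env, d):
    """Nearest-neighbor lookup of direction d (y up) in an equirectangular map."""
    h, w, _ = env.shape
    d = d / np.linalg.norm(d)
    u = np.arctan2(d[0], -d[2]) / (2.0 * np.pi) + 0.5   # longitude -> [0, 1]
    v = np.arccos(np.clip(d[1], -1.0, 1.0)) / np.pi      # polar angle -> [0, 1], 0 = up
    return env[min(int(v * h), h - 1), int(u * w) % w]

def env_brdf_approx(f0, roughness, n_dot_v):
    """Karis's analytic fit to the split-sum BRDF scale/bias (replaces a 2D lookup table)."""
    c0 = np.array([-1.0, -0.0275, -0.572, 0.022])
    c1 = np.array([1.0, 0.0425, 1.04, -0.04])
    r = roughness * c0 + c1
    a004 = min(r[0] * r[0], np.exp2(-9.28 * n_dot_v)) * r[0] + r[1]
    scale = -1.04 * a004 + r[2]
    bias = 1.04 * a004 + r[3]
    return f0 * scale + bias

def shade(normal, view_dir, base_color, metallic, roughness, levels):
    """Split-sum IBL shading of one surface point; view_dir points from surface to camera."""
    n = normal / np.linalg.norm(normal)
    v = view_dir / np.linalg.norm(view_dir)
    n_dot_v = max(float(n @ v), 1e-4)
    refl = 2.0 * n_dot_v * n - v                           # mirror reflection of the view direction
    f0 = 0.04 * (1.0 - metallic) + base_color * metallic   # dielectric vs. metal specular color
    level = int(round(roughness * (len(levels) - 1)))      # rougher -> blurrier mip level
    specular = sample_equirect(levels[level], refl) * env_brdf_approx(f0, roughness, n_dot_v)
    # coarsest mip as a rough stand-in for irradiance / pi (Lambertian diffuse)
    diffuse = (1.0 - metallic) * base_color * sample_equirect(levels[-1], n)
    return diffuse + specular

# Relight one fixed material under different toy environment maps.
rng = np.random.default_rng(0)
env_a = rng.uniform(0.0, 1.0, size=(64, 128, 3))
env_b = 2.0 * env_a
material = dict(base_color=np.array([0.5, 0.5, 0.5]), metallic=0.0, roughness=0.5)
n = np.array([0.0, 0.0, 1.0])
v = np.array([0.0, 0.0, 1.0])
rgb_a = shade(n, v, levels=build_mip_levels(env_a, 6), **material)
rgb_b = shade(n, v, levels=build_mip_levels(env_b, 6), **material)
rgb_white = shade(n, v, levels=build_mip_levels(np.ones((64, 128, 3)), 6), **material)
```

Because the environment map enters only through prefiltered lookups, swapping maps requires no change to the material, which is what makes interactive relighting cheap once the prefiltered levels are computed.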

@Article{violante2024lighting_3D_cars,
author = {Violante, Nicolás and Gauthier, Alban and Diolatzis, Stavros and Leimkühler, Thomas and Drettakis, George},
title = {Physically-Based Lighting for 3D Generative Models of Cars},
journal = {Computer Graphics Forum (Proceedings of the Eurographics Conference)},
number = {2},
volume = {43},
month = {April},
year = {2024},
url = {https://repo-sam.inria.fr/fungraph/lighting-3d-generative-cars/}
}

This research was funded by the ERC Advanced grant FUNGRAPH No 788065. The authors are grateful to Adobe for generous donations, to NVIDIA for a hardware donation, to Université Côte d'Azur for the OPAL infrastructure, and to GENCI-IDRIS for HPC/AI resources (Grants 2022-AD011013898 and AD011013898R1). We thank A. Tewari and A. Bousseau for insightful comments on a draft.