(18MB)

(233MB)

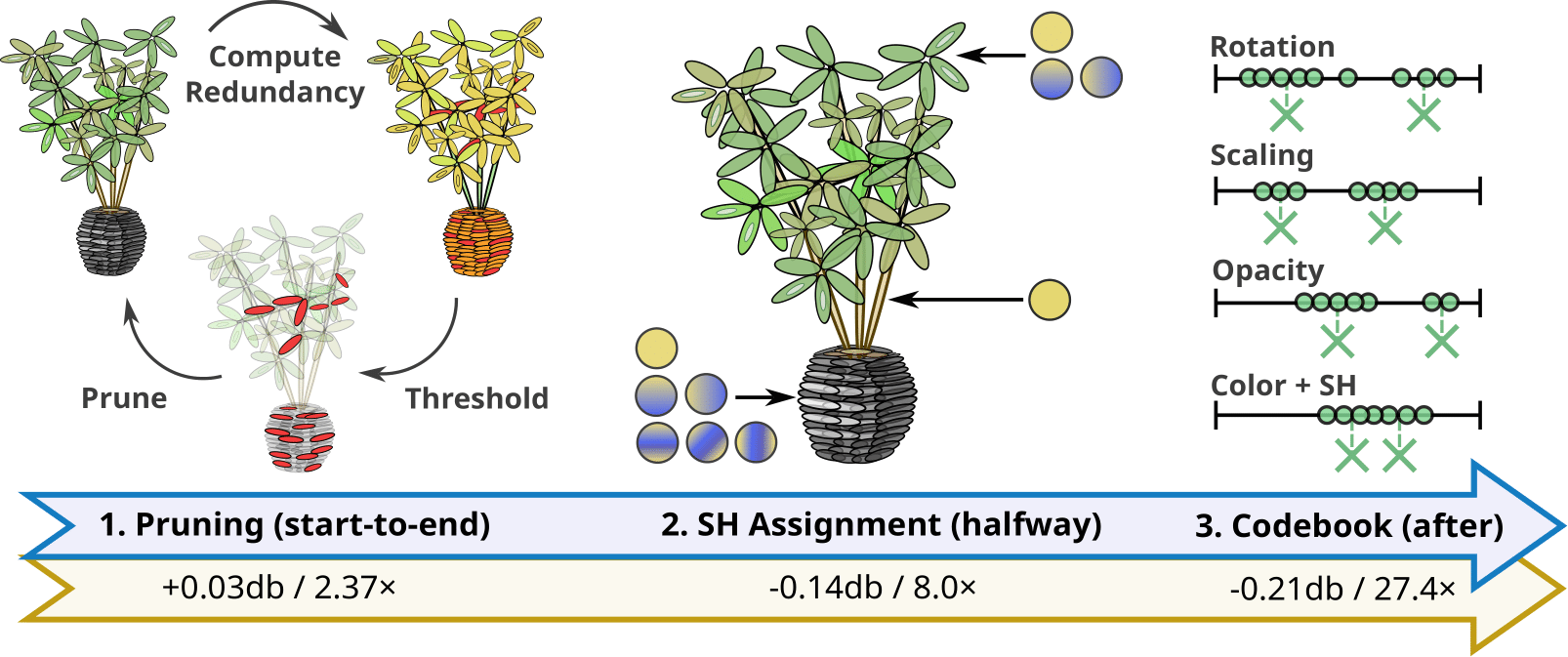

3D Gaussian splatting provides excellent visual quality for novel view synthesis, with fast training and real-time rendering; unfortunately, the memory requirements of this method for storage and transmission are unreasonably high. We first analyze the reasons for this and identify three main areas where storage can be reduced: the number of 3D Gaussian primitives used to represent a scene, the number of spherical harmonics (SH) coefficients used to represent directional radiance, and the precision required to store Gaussian primitive attributes.
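To see why all three areas matter, it helps to count the values stored per primitive. The sketch below assumes the attribute layout of the original 3DGS implementation (3 position values, 3 scales, a 4-value rotation quaternion, 1 opacity, and 3 × (d + 1)² SH coefficients for SH degree d) and ignores any unused fields a file format might carry. The function names are hypothetical. At degree 3, the SH coefficients account for 48 of the 59 values per primitive, so the band count, the primitive count, and the bytes per value all multiply into the final size.

```python
SH_CHANNELS = 3  # one set of SH coefficients per RGB channel


def sh_coeffs_per_channel(degree: int) -> int:
    # Real SH up to `degree` use (degree + 1)^2 coefficients per channel.
    return (degree + 1) ** 2


def floats_per_primitive(sh_degree: int) -> int:
    # Assumed layout: position (3) + scale (3) + rotation quaternion (4) + opacity (1) + SH.
    position, scale, rotation, opacity = 3, 3, 4, 1
    return position + scale + rotation + opacity + SH_CHANNELS * sh_coeffs_per_channel(sh_degree)


def bytes_per_primitive(sh_degree: int, bytes_per_value: int = 4) -> int:
    # 4 bytes per value for float32, 2 bytes for half floats.
    return floats_per_primitive(sh_degree) * bytes_per_value


def scene_megabytes(num_primitives: int, sh_degree: int, bytes_per_value: int = 4) -> float:
    # Uncompressed size of a scene, in megabytes (10^6 bytes).
    return num_primitives * bytes_per_primitive(sh_degree, bytes_per_value) / 1e6


if __name__ == "__main__":
    for d in range(4):
        print(f"degree {d}: {floats_per_primitive(d)} floats, {bytes_per_primitive(d)} bytes")
```

Under these assumptions, a hypothetical scene of one million degree-3 primitives stored as float32 occupies 236 MB. Halving the primitive count, lowering the SH degree, or switching to half floats each shrinks this figure multiplicatively.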

We present a solution to each of these issues. First, we propose an efficient, resolution-aware primitive pruning approach that halves the primitive count. Second, we introduce an adaptive method that chooses the number of coefficients used to represent directional radiance for each Gaussian primitive. Finally, we apply a codebook-based quantization method, together with a half-float representation, for further memory reduction. Taken together, these three components reduce the overall size on disk by 27× on the standard datasets we tested and speed up rendering by 1.7×. We demonstrate our method on these datasets and show how our solution significantly reduces download times when the method is used on a mobile device.
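The selection criterion for the adaptive SH assignment is not spelled out here, so the following is a hypothetical stand-in rather than the authors' method. It keeps the lowest SH degree whose dropped coefficients carry little energy. Because real SH form an orthonormal basis, the sum of the squared dropped coefficients equals the squared L2 error of the truncated radiance integrated over the sphere (Parseval's identity), which makes this a reasonable proxy. The threshold value and all function names are assumptions.

```python
from typing import List, Sequence


def num_coeffs(degree: int) -> int:
    """Real SH up to `degree` use (degree + 1)^2 coefficients per color channel."""
    return (degree + 1) ** 2


def truncation_energy(coeffs: Sequence[Sequence[float]], degree: int) -> float:
    """Sum of squared coefficients dropped when every channel is truncated to `degree`.

    For an orthonormal real SH basis this equals the squared L2 error of the
    truncated radiance, integrated over the sphere (Parseval's identity).
    """
    kept = num_coeffs(degree)
    return sum(c * c for channel in coeffs for c in channel[kept:])


def choose_sh_degree(coeffs: Sequence[Sequence[float]], max_degree: int = 3,
                     threshold: float = 1e-3) -> int:
    """Lowest degree whose truncation error stays below a (hypothetical) threshold."""
    for degree in range(max_degree + 1):
        if truncation_energy(coeffs, degree) <= threshold:
            return degree
    return max_degree  # fallback; unreachable for threshold >= 0


def truncate(coeffs: Sequence[Sequence[float]], degree: int) -> List[List[float]]:
    """Keep only the coefficients of bands 0..degree in each channel."""
    kept = num_coeffs(degree)
    return [list(channel[:kept]) for channel in coeffs]


if __name__ == "__main__":
    # A view-independent (diffuse) primitive: only the DC term is non-zero.
    diffuse = [[0.5] + [0.0] * 15 for _ in range(3)]
    print(choose_sh_degree(diffuse), truncate(diffuse, 0))
```

A primitive whose color barely changes with viewing direction ends up with a single coefficient per channel instead of 16. This is where the per-primitive savings of the band assignment come from.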

Our approach to reducing the memory requirements of the 3DGS representation consists of three methods: a primitive pruning strategy that runs during optimization, an SH band assignment that happens once, halfway through the optimization, and a codebook quantization that is applied as post-processing. These result, respectively, in a reduced number of primitives, a smaller footprint per primitive, and on-disk compression.
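The post-processing step can be illustrated with plain k-means vector quantization (Lloyd's algorithm) combined with half-float storage. This is a generic sketch, not the authors' implementation. The farthest-point initialization, the codebook size, the uint8 index type, and which attributes get quantized are all assumptions, and the function names are hypothetical. Each attribute vector is replaced by the index of its nearest codebook entry, and the codebook itself is written as IEEE 754 half floats using the standard `struct` format character `e`.

```python
import struct
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(v: Vector, codebook: Sequence[Vector]) -> int:
    # Index of the codebook entry closest to v (ties go to the lower index).
    return min(range(len(codebook)), key=lambda j: sq_dist(v, codebook[j]))


def farthest_point_init(vectors: Sequence[Vector], k: int) -> List[List[float]]:
    # Deterministic seeding: start from the first vector, then repeatedly add
    # the vector farthest from every centroid chosen so far.
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        far = max(vectors, key=lambda v: min(sq_dist(v, c) for c in centroids))
        centroids.append(list(far))
    return centroids


def kmeans_codebook(vectors: Sequence[Vector], k: int,
                    iterations: int = 20) -> Tuple[List[List[float]], List[int]]:
    codebook = farthest_point_init(vectors, k)
    for _ in range(iterations):
        indices = [nearest(v, codebook) for v in vectors]  # assignment step
        for j in range(k):  # update step: move each entry to its members' mean
            members = [v for v, i in zip(vectors, indices) if i == j]
            if members:  # an empty cluster keeps its previous centroid
                codebook[j] = [sum(col) / len(members) for col in zip(*members)]
    indices = [nearest(v, codebook) for v in vectors]  # final assignment
    return codebook, indices


def pack_quantized(codebook: Sequence[Vector], indices: Sequence[int]) -> bytes:
    # Codebook as little-endian half floats ('e'), indices as uint8 ('B').
    if len(codebook) > 256:
        raise ValueError("uint8 indices support at most 256 codebook entries")
    flat = [x for entry in codebook for x in entry]
    return struct.pack(f"<{len(flat)}e", *flat) + struct.pack(f"<{len(indices)}B", *indices)


if __name__ == "__main__":
    # Hypothetical data: two tight clusters of 2D attribute vectors.
    vectors = ([[0.01 * (i % 5), 0.0] for i in range(50)]
               + [[1.0 + 0.01 * (i % 5), 1.0] for i in range(50)])
    codebook, indices = kmeans_codebook(vectors, k=2)
    blob = pack_quantized(codebook, indices)
    print(len(blob), "bytes vs", 4 * len(vectors) * 2, "bytes as float32")  # 108 bytes vs 800 bytes as float32
```

Storage drops from one float32 vector per primitive to a one-byte index per primitive plus a small shared codebook, which is why quantization pays off most for large scenes where the codebook cost is amortized.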

We tested our algorithm on the Mip-NeRF 360 [Barron 2022], DeepBlending [Hedman 2018] and Tanks&Temples [Knapitsch 2017] datasets. We reduce the memory footprint of the representation with only a minimal drop in quality metrics.

Below you can find the videos from the supplemental material comparing our method to the baseline, each paired with a slider for interactive comparison.

Below we show visual comparisons between our method, the baseline, and MERF [Reiser 2023], a NeRF model that can run on low-end devices.

The following concurrent works were released at the same time as ours:

LightGaussian: Unbounded 3D Gaussian Compression with 15x Reduction and 200+ FPS

EAGLES: Efficient Accelerated 3D Gaussians with Lightweight EncodingS

Compact3D: Compressing Gaussian Splat Radiance Field Models with Vector Quantization

Compressed 3D Gaussian Splatting for Accelerated Novel View Synthesis

@Article{PapantonakisReduced3dgs,
  author = {Papantonakis, Panagiotis and Kopanas, Georgios and Kerbl, Bernhard and Lanvin, Alexandre and Drettakis, George},
  title = {Reducing the Memory Footprint of 3D Gaussian Splatting},
  journal = {Proceedings of the ACM on Computer Graphics and Interactive Techniques},
  number = {1},
  volume = {7},
  month = {May},
  year = {2024},
  url = {https://repo-sam.inria.fr/fungraph/reduced_3dgs/}
}

This research was funded by the ERC Advanced grant FUNGRAPH No 788065. The authors are grateful to Adobe for generous donations, to NVIDIA for a hardware donation, and to the OPAL infrastructure from Université Côte d’Azur.

[Hedman 2018] Hedman, Peter, et al. "Deep blending for free-viewpoint image-based rendering." ACM Transactions on Graphics (TOG) 37.6 (2018): 1-15.

[Barron 2022] Barron, Jonathan T., et al. "Mip-NeRF 360: Unbounded anti-aliased neural radiance fields." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2022.

[Knapitsch 2017] Knapitsch, Arno, et al. "Tanks and temples: Benchmarking large-scale scene reconstruction." ACM Transactions on Graphics (TOG) 36.4 (2017): 1-13.

[Reiser 2023] Reiser, Christian, et al. "MERF: Memory-efficient radiance fields for real-time view synthesis in unbounded scenes." ACM Transactions on Graphics (TOG) 42.4 (2023): 1-12.